Wesley C. Salmon, Logic (1984).

CHAPTER THREE

Induction

Inductive arguments, unlike deductive arguments, provide conclusions whose content exceeds that of their premises. It is precisely this characteristic that makes inductive arguments useful; at the same time, it gives rise to extremely difficult philosophical problems in the analysis of the concept of inductive support. In spite of these difficulties we can -- without becoming involved in great philosophical subtleties -- set out and examine some important forms of inductive argument and some common inductive fallacies. Beginning with the simplest kind of inductive generalization, we shall consider a number of different types of inductive arguments, including analogy, causal reasoning, and the confirmation of scientific hypotheses. Arguments of these sorts underlie almost all of our knowledge, from the most basic levels of common sense to rather sophisticated realms of science.

The fundamental purpose of arguments, inductive or deductive, is to establish true conclusions on the basis of true premises. We want our arguments to have true conclusions if they have true premises. As we have seen, valid deductive arguments necessarily have that characteristic. Inductive arguments, however, have another purpose as well. They are designed to establish conclusions whose content goes beyond the content of the premises. To do this, inductive arguments must sacrifice the necessity of deductive arguments. Unlike a valid deductive argument, a logically correct inductive argument may have true premises and a false conclusion. Nevertheless, even though we cannot guarantee that the conclusion of an inductive argument is true if the premises are true, still, the premises of a correct inductive argument do support or lend weight to the conclusion. In other words, as we said in section 4, if the premises of a valid deductive argument are true, the conclusion must be true; if the premises of a correct inductive argument are true, the best we can say is that the conclusion is probably true.

As we also pointed out in section 4, deductive arguments are either completely valid or else totally invalid; there are no degrees of partial validity. We shall reserve the term "valid" for application to deductive arguments; we shall continue to use the term "correct" to evaluate inductive arguments. There are certain errors that can render inductive arguments either absolutely or practically worthless. We shall refer to these errors as inductive fallacies. If an inductive argument is fallacious, its premises do not support its conclusion. On the other hand, among correct inductive arguments there are degrees of strength or support. The premises of a correct inductive argument may render the conclusion extremely probable, moderately probable, or probable to some extent. Consequently, the premises of a correct inductive argument, if true, constitute reasons of some degree of strength for accepting the conclusion.

There is another difference between inductive and deductive arguments that is closely related to those already mentioned. Given a valid deductive argument, we may add as many premises as we wish without destroying its validity. This fact is obvious. The original argument is such that, if its premises are true, its conclusion must be true; this characteristic remains no matter how many premises are added as long as the original premises are not taken away. By contrast, the degree of support of the conclusion by the premises of an inductive argument can be increased or decreased by additional evidence in the form of additional premises. Since the conclusion of an inductive argument may be false even though the premises are true, additional relevant evidence may enable us to determine more reliably whether the conclusion is, indeed, true or false. Thus, it is a general characteristic of inductive arguments, which is completely absent from deductive arguments, that additional evidence may be relevant to the degree to which the conclusion is supported. Where inductive arguments are concerned, additional evidence may have crucial importance.

In the succeeding sections of this chapter we shall discuss several correct types of inductive argument and several fallacies. Before beginning this discussion, it is important to mention a fundamental problem concerning inductive correctness. The philosopher David Hume (1711-1776), in A Treatise of Human Nature (1739-1740) and An Enquiry Concerning Human Understanding (1748), pointed to serious difficulties that arise in trying to prove the correctness of inductive arguments. At the present time there is still considerable controversy about this problem -- usually called "the problem of the justification of induction." Experts disagree widely about the nature of inductive correctness, about whether Hume's problem is a genuine one, and about the methods of showing that a particular type of inductive argument is correct.1 In spite of this controversy, there is a reasonable amount of agreement about which types of inductive argument are correct. We shall not enter into the problem of the justification of induction; rather, we shall attempt to characterize some of the types of inductive argument about which there is fairly general agreement.

1 My dialogue, "An Encounter with David Hume," in Joel Feinberg, ed., Reason and Responsibility, 3rd ed. (New York: Wadsworth Publishing Co., 1975) attempts to set forth in elementary terms Hume's problem of the justification of induction and place it in a modern context. See also my Foundations of Scientific Inference (Pittsburgh, Pa.: University of Pittsburgh Press, 1967) for an introductory treatment of this and other problems lying at the basis of inductive logic.

By far the simplest type of inductive argument is induction by enumeration. In arguments of this type, a conclusion about all of the members of a class is drawn from premises that refer to observed members of that class.

| a] | Suppose we have a barrel of coffee beans. After mixing them up, we remove a sample of beans, taking parts of the sample from different parts of the barrel. Upon examination, the beans in the sample are all found to be grade A. We then conclude that all of the beans in the barrel are grade A. |

This argument may be written as follows:

| b] | All beans in the observed sample are grade A. |

| ∴ | All beans in the barrel are grade A. |

The premise states the information about the observed members of the class of beans in the barrel; the conclusion is a statement about all of the members of that class. It is a generalization based upon the observation of the sample.

It is not essential for the conclusion of an induction by enumeration to have the form "All F are G." Frequently, the conclusion will state that a certain percentage of F are G. For example,

| c] | Suppose we have another barrel of coffee beans, and we take a sample from it as in a. Upon examination, we find that 75 percent of the beans in the sample are grade A. We conclude that 75 percent of all of the beans in the barrel are grade A. |

This argument is similar to b.

| d] | 75 percent of the beans in the observed sample are grade A. |

| ∴ | 75 percent of the beans in the barrel are grade A. |

Both b and d share the same form. Since "all" means "100 percent," the form of both arguments may be given as follows:

| e] | Z percent of the observed members of F are G. |

| ∴ | Z percent of F are G. |

This is the general form of induction by enumeration. If the conclusion is "100 percent of F are G" (i.e., "All F are G") or "0 percent of F are G" (i.e., "No F are G"), it is a universal generalization. If Z is some percentage other than 0 or 100, the conclusion is a statistical generalization.
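The general form e can be sketched in a few lines of Python. This is only an illustration added here, not part of the original exposition; the function names `enumerate_induction` and `kind_of_generalization` and the four-bean sample are hypothetical. The sketch computes Z from the observed members, projects the same Z onto the whole class, and classifies the resulting conclusion.

```python
def enumerate_induction(observed, is_g):
    """Return Z: the percentage of observed members of F that are G.

    Induction by enumeration then projects this same Z onto all of F.
    The projection is the inductive step; nothing here guarantees it.
    """
    if not observed:
        raise ValueError("no observed members: nothing to generalize from")
    hits = sum(1 for member in observed if is_g(member))
    return 100.0 * hits / len(observed)


def kind_of_generalization(z):
    """Classify the conclusion 'Z percent of F are G'."""
    return "universal" if z in (0.0, 100.0) else "statistical"


# Hypothetical sample of beans, each recorded by its grade.
sample = ["A", "A", "B", "A"]
z = enumerate_induction(sample, lambda grade: grade == "A")
print(f"{z:g} percent of the observed beans are grade A")
print(f"Conclusion: {z:g} percent of the beans in the barrel are grade A "
      f"({kind_of_generalization(z)} generalization)")
```

The code only restates the form of the argument. Whether the projection is warranted depends on the size and makeup of the sample, which the code does not examine.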

Here are some additional examples of induction by enumeration.

| f] | A public opinion pollster questions 5,000 people in the United States to ascertain their opinions about the desirability of a constitutional amendment prohibiting abortion. Of those questioned, 62 percent are opposed. The pollster concludes that approximately 62 percent of the people in the United States oppose adoption of such an amendment. |

| g] | In a certain factory, there is a machine that produces can openers. An inspector examines one-tenth of all the can openers produced by this machine. In this sample, the inspector finds that 2 percent of the can openers are defective. The management concludes, on the basis of this information, that 2 percent (approximately) of the can openers produced by the machine are defective. |

| h] | A great deal of everyday commonsense learning from experience consists in making inductions by enumeration. All observed fires have been hot; we conclude that all fires are hot. Every instance of drinking water when one is thirsty has resulted in the quenching of thirst; future drinking of water when thirsty will result in the quenching of thirst. Every lemon so far tasted has been sour; future lemons will taste sour. |

It is evident that induction by enumeration can easily yield false conclusions from true premises. For example,

| i] | Europeans had, for centuries before the discovery of Australia, observed countless swans, and all that they had ever seen were white. They concluded, quite reasonably, that all swans are white. This inductive generalization turned out to be false, for black swans were found in Australia. |

We should not be surprised that such failures of induction by enumeration sometimes occur, for this possibility is characteristic of all types of inductive argument. All we can do is try to construct our inductive arguments in a way that will minimize the chances of false conclusions from true premises. In particular, there are two specific ways to lessen the chances of error in connection with induction by enumeration; that is, there are two inductive fallacies to be avoided. They will be taken up in the next two sections.

The fallacy of insufficient statistics is the fallacy of making an inductive generalization before enough data have been accumulated to warrant the generalization. It might well be called "the fallacy of jumping to a conclusion."

| a] | In examples a and c of the preceding section, suppose the observed samples had each consisted of only four coffee beans. These surely would not have been enough data to make a reliable generalization. On the other hand, a sample of several thousand beans would be large enough for a much more reliable generalization. |

| b] | A public opinion pollster who interviewed only ten people could hardly be said to have enough evidence to warrant any conclusion about the general climate of opinion in the nation. |

| c] | A person who refuses to buy an automobile of a certain make because he knows someone who owned a "lemon" is probably making a generalization, on the basis of exceedingly scanty evidence, about the frequency with which the manufacturer in question produces defective automobiles. |

| d] | People who are prone to prejudice against racial, religious, or national minorities are often given to sweeping generalizations about all of the members of a given group on the basis of observation of two or three cases. |

It is easy to see that the foregoing examples are all too typical of mistakes made every day by all kinds of people. The fallacy of insufficient statistics is a common one indeed. It is closely related to the post hoc fallacy (see section 29).

It would be convenient if we could set down some definite number and say that we always have enough data if the examined instances exceed this number. Unfortunately, this cannot be done. The number of instances that constitute sufficient statistics varies from case to case, from one area of investigation to another. Sometimes two or three instances may be enough; sometimes millions may be required. How many cases are sufficient can be learned only by experience in the particular area of investigation under consideration.

There is another factor that influences the problem of how many cases are needed. Any number of instances constitutes some evidence; the question at issue is whether we have sufficient evidence to draw a conclusion. This depends in part upon what degree of reliability we desire. If very little is at stake -- if it does not matter much if we are wrong -- then we may be willing to generalize on the basis of relatively few instances. If a great deal is at stake, then we require much more evidence.
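A small simulation can make the effect of sample size concrete. The sketch below is an illustration under stated assumptions, not a rule for deciding when a sample is large enough: it assumes a barrel in which exactly 75 percent of the beans are grade A, it assumes each bean is drawn at random and independently, and the name `sample_percentages` and the chosen sample sizes are hypothetical. It draws many samples of each size and reports how widely the observed percentages scatter.

```python
import random
import statistics


def sample_percentages(true_percent, sample_size, trials, rng):
    """Draw `trials` random samples and return the percent of G in each."""
    p = true_percent / 100.0
    results = []
    for _ in range(trials):
        hits = sum(1 for _ in range(sample_size) if rng.random() < p)
        results.append(100.0 * hits / sample_size)
    return results


rng = random.Random(0)  # fixed seed so the run is repeatable
spread = {}
for n in (4, 100, 2000):
    percents = sample_percentages(75, n, trials=500, rng=rng)
    # Standard deviation of the observed percentages across trials.
    spread[n] = statistics.pstdev(percents)
    print(f"sample size {n:>5}: observed percentages scatter by about "
          f"{spread[n]:.1f} points")
```

The scatter shrinks as the sample grows. How small a scatter is small enough is not something the simulation can settle; that still depends on the area of investigation and on what is at stake.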

It is important not only to have a large enough number of instances but also to avoid selecting them in a way that will prejudice the outcome. If inductive generalizations are to be reliable, they must be based upon representative samples. Unfortunately, we can never be sure that our samples are genuinely representative, but we can do our best to avoid unrepresentative ones. The fallacy of biased statistics consists of basing an inductive generalization upon a sample that is known to be unrepresentative or one that there is good reason to suspect may be unrepresentative.

| a] | In example a of section 20, it was important to mix up the beans in the barrel before selecting our sample; otherwise there would be danger of getting an unrepresentative sample. It is entirely possible that someone might have filled the barrel almost full of low-quality beans, putting a small layer of high-quality beans on top. By mixing the barrel thoroughly we overcome the danger of taking an unrepresentative sample for a reason of that kind. |

| b] | Many people disparage the ability of the weather forecaster to make accurate predictions. Perhaps the forecaster has a legitimate complaint when she says, "When I'm right no one remembers; when I'm wrong no one forgets." |

| c] | Racial, religious, or national prejudice is often bolstered by biased statistics. An undesirable characteristic is attributed to a minority group. Then all cases in which a member of the group manifests this characteristic are carefully noted and remembered, whereas those cases in which a member of the group fails to manifest the undesirable characteristic are completely ignored. |

| d] | Francis Bacon (1561-1626) gives a striking example of biased statistics in the following passage: "The human understanding when it has once adopted an opinion (either as being the received opinion or as being agreeable to itself) draws all things else to support and agree with it. And though there be a greater number and weight of instances to be found on the other side, yet these it either neglects and despises, or else by some distinction sets aside and rejects; in order that by this great and pernicious predetermination the authority of its former conclusions may remain inviolate. And therefore it was a good answer that was made by one who when they showed him hanging in a temple a picture of those who had paid their vows as having escaped shipwreck, and would have him say whether he did not now acknowledge the power of the gods, -- 'Aye,' asked he again, 'But where are they painted that were drowned after their vows?' And such is the way of all superstition, whether in astrology, dreams, omens, divine judgments, or the like; wherein men, having a delight in such vanities, mark the events where they are fulfilled, but where they fail, though this happen much oftener, neglect and pass them by."2 |

What Bacon noticed centuries ago still happens frequently today:

| e] | The Pittsburgh Post-Gazette, on June 22, 1982, carried a front-page story about a couple who had won a large prize in the Pennsylvania lottery. "Dorothy Thomas had discovered earlier in the day that she and her husband were the winners of last week's Pennsylvania Lotto game and its $5.5 million prize, the largest sum ever won in a lottery in the United States. |

"Mrs. Thomas literally consulted the stars in selecting the six winning numbers.

" 'I just picked the birth dates for our four kids, my husband and myself,' she said.

"For the record, it was a combination of two Capricorns and one each Gemini, Libra, Taurus and Virgo. . . . So much for the disrepute of astrology and its practitioners." (Italics added.) |

| f] | In 1936 the Literary Digest conducted a pre-election poll to predict the outcome of the Roosevelt-Landon contest. About ten million ballots were sent out and over two-and-a-quarter million were returned. The Literary Digest poll did not commit the fallacy of insufficient statistics, for the number of returns constitutes an extremely large sample. However, the results were disastrous. The poll predicted a victory for Landon and forecast only 80 percent of the votes Roosevelt actually received. Shortly thereafter, the magazine and its poll, which had cost about a half-million dollars, folded. There were major sources of bias. First, the names of people to be polled were taken mainly from lists of telephone subscribers and lists of automobile registrations. Other studies showed that 59 percent of telephone subscribers and 56 percent of automobile owners favored Landon, whereas only 18 percent of those on relief favored him. Second, there is a bias in the group of people who voluntarily returned questionnaires mailed out to them. This bias probably reflects the difference in economic classes which was operating in the first case. Even in those cases in which the Literary Digest used lists of voter registrations, the returns showed a strong bias for Landon.3 |

The most blatant form of the fallacy of biased statistics occurs when one simply closes one's eyes to certain kinds of evidence, usually evidence unfavorable to a belief one holds. Examples b and c illustrate the fallacy in this crude form. In other cases, especially f, subtler issues are involved. However, there is one procedure generally designed to lessen the chances of getting a sample that is not representative. The instances examined should differ as widely as possible, as long as they are relevant. If we are concerned to establish a conclusion of the form "Z percent of F are G," then our instances, to be relevant, must all be members of F. One way to attempt to avoid a biased sample is to examine as wide a variety of members of F as possible. In addition, if we can know what percentage of all the members of F are of various kinds, we can see to it that our sample reflects the makeup of the whole class. This is what many public opinion polls try to do.

| g] | In order to predict the outcome of an election, a public opinion poll will interview a certain number of rural voters and a certain number of urban voters; a certain number of upper-class, middle-class, and lower-class voters; a certain number of voters from the different sections of the country; etc. In this way a variety of instances is accumulated, and furthermore, this variety in the sample reflects the percentages in the makeup of the whole voting population. |
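The idea of making the sample reflect the makeup of the whole class can be sketched as a quota calculation. The following is a minimal illustration, assuming the percentages of each kind of voter are already known; the function name `proportional_quotas` and the 30/70 rural-urban split are hypothetical and are not figures from any actual poll.

```python
def proportional_quotas(shares, sample_size):
    """Split `sample_size` interviews across groups in proportion to
    each group's share (in percent) of the whole population."""
    if abs(sum(shares.values()) - 100.0) > 1e-9:
        raise ValueError("shares must add up to 100 percent")
    # Round each group's exact quota down to a whole number of interviews.
    quotas = {g: int(sample_size * pct / 100.0) for g, pct in shares.items()}
    # Hand out interviews lost to rounding down, largest remainder first.
    leftover = sample_size - sum(quotas.values())
    by_remainder = sorted(
        shares,
        key=lambda g: sample_size * shares[g] / 100.0 - quotas[g],
        reverse=True,
    )
    for group in by_remainder[:leftover]:
        quotas[group] += 1
    return quotas


# Hypothetical makeup of a voting population, in percent.
shares = {"rural": 30.0, "urban": 70.0}
print(proportional_quotas(shares, 1000))
```

Matching known proportions guards only against the kinds of bias one has thought to list. A sample can match every listed category and still be unrepresentative in some respect nobody recorded.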

We have discussed the fallacies of insufficient statistics and biased statistics as errors to be avoided in connection with induction by enumeration. Essentially the same sort of fallacy can occur with any sort of inductive argument. It is always possible to accept an inductive conclusion on the basis of too little evidence, and it is always possible for inductive evidence to be biased. We must, therefore, be on the lookout for these fallacies in all kinds of inductive arguments.
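The layered barrel described in example a of this section shows how a sample can be large and still misleading. The sketch below is an illustration only: the barrel contents (100 grade A beans on top of 900 grade C beans), the sample size of 50, and the helper name `percent_grade_a` are all hypothetical. It compares a sample scooped from the top with a sample drawn at random from the whole barrel, which stands in for mixing.

```python
import random

# Hypothetical barrel: a thin layer of grade A beans on top of
# low-grade beans. Index 0 is the top of the barrel.
barrel = ["A"] * 100 + ["C"] * 900


def percent_grade_a(beans):
    """Percentage of the given beans that are grade A."""
    return 100.0 * sum(1 for b in beans if b == "A") / len(beans)


rng = random.Random(1)  # fixed seed so the run is repeatable
top_sample = barrel[:50]                # scoop only from the top layer
mixed_sample = rng.sample(barrel, 50)   # draw from the whole barrel

print(f"whole barrel:    {percent_grade_a(barrel):.0f} percent grade A")
print(f"top-only sample: {percent_grade_a(top_sample):.0f} percent grade A")
print(f"mixed sample:    {percent_grade_a(mixed_sample):.0f} percent grade A")
```

Both samples have the same size, so the difference between them is a matter of bias rather than of insufficient statistics.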

2 Francis Bacon, Novum Organum, aphorism xlvi. Italics added.

3 See Mildred Parten, Surveys, Polls, and Samples (New York: Harper & Row, Publishers, Inc., 1950), pp. 24f and 392f. Adapted by permission.

It often happens that a conclusion established by one argument is used as a premise in another argument. In example a of section 20 we concluded, using induction by enumeration, that all the coffee beans in a certain barrel are grade A. Using that conclusion as a premise of a quasi-syllogism (section 14), we may conclude that the next coffee bean drawn from the barrel will be grade A.

| a] | All beans in the barrel are grade A.

The next bean to be drawn from the barrel is a bean in the barrel. |

| ∴ | The next bean to be drawn from the barrel is grade A. |

In this example, the conclusion of the previous induction by enumeration is a universal generalization. However, if the conclusion of the previous induction is a statistical generalization, we obviously cannot construct the same type of deductive argument. In this case, we can construct an inductive argument of the type called statistical syllogism (because of a resemblance to categorical syllogism). In example c of section 20 we concluded, using induction by enumeration, that 75 percent of the coffee beans in a certain barrel are grade A. We can use this conclusion as a premise and construct the following argument:

| b] | 75 percent of the beans in the barrel are grade A.

The next bean to be drawn from the barrel is a bean in the barrel. |

| ∴ | The next bean to be drawn from the barrel is grade A. |

Obviously, the conclusion of argument b could be false even if the premises are true. Nevertheless, if the first premise is true and we use the same type of argument for each of the beans in the barrel, we will get a true conclusion in 75 percent of these arguments and a false conclusion in only 25 percent of them. On the other hand, if we were to conclude from these premises that the next bean to be drawn will not be grade A, we would get false conclusions in 75 percent of such arguments and true conclusions in only 25 percent of them. Clearly it is better to conclude that the next bean will be grade A than to conclude that it will not be grade A. (Even if we are not willing to assert that the next bean will be grade A, we could reasonably be prepared to bet on it at odds somewhere near three to one.) The form of the statistical syllogism may be represented as follows:

| c] | Z percent of F are G.

x is F. |

| ∴ | x is G. |

The strength of the statistical syllogism depends upon the value of Z. If Z is very close to 100, we have a very strong argument; that is, the premises very strongly support the conclusion. If Z equals 50, the premises offer no support for the conclusion, for the same premises would equally support the conclusion "x is not G." If Z is less than 50, the premises do not support the conclusion; rather, they support the conclusion "x is not G." If Z is close to zero, the premises offer strong support for the conclusion "x is not G."
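The dependence of the argument on Z can be written out as a small decision rule. This is a sketch for illustration, not a measure of inductive strength; the function names `supported_conclusion` and `true_conclusion_rate` are hypothetical. The first function reports which conclusion the premises support, and the second gives the percentage of true conclusions obtained if the same argument is repeated for every member of F.

```python
def supported_conclusion(z):
    """Given 'Z percent of F are G' and 'x is F', say which conclusion
    the premises support, following the rule stated above."""
    if not 0 <= z <= 100:
        raise ValueError("Z must be a percentage between 0 and 100")
    if z > 50:
        return "x is G"
    if z < 50:
        return "x is not G"
    return None  # Z = 50: no support either way


def true_conclusion_rate(z, conclusion):
    """Percent of members of F for which `conclusion` comes out true
    when the same argument is repeated for every member of F."""
    return z if conclusion == "x is G" else 100 - z


for z in (75, 50, 2):
    c = supported_conclusion(z)
    if c is None:
        print(f"Z = {z}: premises support neither conclusion")
    else:
        print(f"Z = {z}: conclude '{c}', true in "
              f"{true_conclusion_rate(z, c)} percent of such arguments")
```

With Z = 75 the rule reproduces the coffee bean case: concluding "x is G" is right in 75 percent of the arguments, and concluding "x is not G" is right in only 25 percent.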

The first premise of a statistical syllogism may be stated in terms of an exact numerical value for Z, but in many cases it will be a less exact statement. The following kinds of statement are also acceptable as first premises in statistical syllogisms:

| d] | Almost all F are G.

The vast majority of F are G.

Most F are G.

A high percentage of F are G.

There is a high probability that an F is a G. |

You may feel uneasy about arguments like b in which the conclusion is given without qualification; perhaps you feel that the conclusion should read, "The next bean to be drawn from the barrel is probably grade A." In order to deal with this problem, reconsider argument a, which might be rendered less formally:

| e] | Since all of the beans in the barrel are grade A, the next bean to be drawn from the barrel must be grade A. |

Obviously, there is no necessity in the mere fact of the next bean being grade A. As we noted earlier, a verb form like "must be" serves to indicate that a statement is the conclusion of a deductive argument. The necessity it indicates is this: If the premises are true, the conclusion must be true. The conclusion of the deductive argument is "The next bean to be drawn from the barrel is grade A", not "The next bean to be drawn from the barrel must be grade A." "Must be" signifies a certain relationship between premises and conclusion; it is not part of the conclusion by itself. Similarly, argument b might be informally stated,

| f] | Since 75 percent of the beans in the barrel are grade A, the next bean to be drawn from the barrel is probably grade A. |

In this case, the term "probably" indicates that an inductive conclusion is being given. Just as "must be" signifies a deductive relation between premises and conclusion in e, so does "probably" signify an inductive relation between premises and conclusion in f. Just as "must be" is not part of the conclusion itself, so is "probably" not part of the conclusion itself.

This point can be further reinforced. Consider the following statistical syllogism:

| g] | The vast majority of 35-year-old American men will survive for three more years.

Henry Smith is a 35-year-old American man. |

| ∴ | Henry Smith will survive for three more years. |

Suppose, however, that Henry Smith has an advanced case of lung cancer. Then we can set forth the following statistical syllogism as well:

| h] | The vast majority of people with advanced cases of lung cancer will not survive for three more years.

Henry Smith has an advanced case of lung cancer. |

| ∴ | Henry Smith will not survive for three more years. |

The premises of g and h could all be true; they are not incompatible with each other. The conclusions of g and h contradict each other. This situation can arise only with inductive arguments. If two valid deductive arguments have compatible premises, they cannot have incompatible conclusions. The situation is not noticeably improved by adding "probably" as a qualifier in the conclusions of g and h. It would still be contradictory to say that Henry Smith probably will, and probably will not, survive for three more years. Given two arguments like g and h, which should we accept? Both arguments have correct inductive form and both have true premises. However, we cannot accept both conclusions, for to do so would be to accept a self-contradiction. The difficulty is that neither g nor h embodies all the relevant evidence concerning the survival of Henry Smith. The premises of g state only part of our evidence, and the premises of h state only part of our evidence. However, it will not be sufficient simply to combine the premises of g and h to construct a new argument:

| i] | The vast majority of 35-year-old American men will survive for three more years.

The vast majority of people with advanced cases of lung cancer will not survive for three more years.

Henry Smith is a 35-year-old American man with an advanced case of lung cancer. |

| ∴ | ? |

From the premises of i we cannot draw any conclusion, even inductively, concerning Henry Smith's survival. For all we can conclude, either deductively or inductively, from the first two premises of i, 35-year-old American men may be an exceptional class of people with respect to lung cancer. The vast majority of them may survive lung cancer, or the survival rate may be about 50 percent. No conclusion can be drawn. However, we do have further evidence. We know that 35-year-old American men are not so exceptional; the vast majority of 35-year-old American men with advanced cases of lung cancer do not survive for three more years. Thus, we can set up the following statistical syllogism:

| j] | The vast majority of 35-year-old American men with advanced cases of lung cancer do not survive for three more years.

Henry Smith is a 35-year-old American man with an advanced case of lung cancer. |

| ∴ | Henry Smith will not survive for three more years. |

Assuming that the premises of j embody all the evidence we have that is relevant, we may accept the conclusion of j.

We can now see the full force of the remark in section 19 to the effect that additional evidence is relevant to inductive arguments in a way in which it is not relevant to deductive arguments. The conclusion of a deductive argument is acceptable if (1) the premises are true and (2) the argument has a correct form. These two conditions are not sufficient to make the conclusion of an inductive argument acceptable; a further condition must be added. The conclusion of an inductive argument is acceptable if (1) the premises are true, (2) the argument has a correct form, and (3) the premises of the argument embody all available relevant evidence. This last requirement is known as the requirement of total evidence. Inductive arguments that violate condition 3 commit the fallacy of incomplete evidence.
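The Henry Smith case can be illustrated with a toy table of records. Everything in the sketch below is invented for illustration: the counts are not real survival statistics, and the tuple layout and the helper name `survival_percent` are hypothetical. The point is only that the survival rate for each class taken separately does not fix the rate for the class that embodies both pieces of evidence; that rate has to be looked up for the combined class itself.

```python
# Hypothetical records, one tuple per person:
# (is_35_year_old_man, has_advanced_lung_cancer, survived_3_years).
# The counts are invented for illustration only.
records = (
    [(True, False, True)] * 970 + [(True, False, False)] * 10
    + [(True, True, True)] * 2 + [(True, True, False)] * 18
    + [(False, True, True)] * 10 + [(False, True, False)] * 90
)


def survival_percent(rows):
    """Percentage of the given records in which the person survived."""
    return 100.0 * sum(1 for r in rows if r[2]) / len(rows)


men_35 = [r for r in records if r[0]]          # reference class of g
cancer = [r for r in records if r[1]]          # reference class of h
both = [r for r in records if r[0] and r[1]]   # reference class of j

print(f"35-year-old men:      {survival_percent(men_35):.1f} percent survive")
print(f"advanced lung cancer: {survival_percent(cancer):.1f} percent survive")
print(f"both:                 {survival_percent(both):.1f} percent survive")
```

In this invented table the premises of g and h both hold, and the combined class behaves like the lung cancer class. A different table could keep the first two rates the same while giving the combined class a high survival rate, which is why argument i supports no conclusion and argument j needs its own premise.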

A frequent method of attempting to support a conclusion is to cite some person, institution, or writing that asserts that conclusion. This type of argument has the form

| a] | x asserts p. |

| ∴ | p. |

As this form stands, it is clearly fallacious. Nevertheless, there are correct uses of authority as well as incorrect ones. It would be a sophomoric mistake to suppose that every appeal to authority is illegitimate, for the proper use of authority plays an indispensable role in the accumulation and application of knowledge. If we were to reject every appeal to authority, we would have to maintain, for example, that no one is ever justified in accepting the judgment of a medical expert concerning an illness. Instead, one would have to become a medical expert oneself, and one would face the impossible task of doing so without relying on the results of any other investigators. Instead of rejecting the appeal to authority entirely, we must attempt to distinguish correct from incorrect appeals.

As a matter of fact, we frequently do make legitimate uses of authority. We consult textbooks, encyclopedias, and experts in various fields. In these cases the appeal is justified by the fact that the authority is known to be honest and well informed in the subject under consideration. We often have good grounds for believing that the authority is usually correct. Finally -- and this is a crucial point -- the expert is known to have based his or her judgment upon objective evidence which could, if necessary, be examined and verified by any competent person. Under these conditions, we shall say that the authority is reliable. The appeal to a reliable authority is legitimate, for the testimony of a reliable authority is evidence for the conclusion. The following form of argument is correct:

| b] | x is a reliable authority concerning p.

x asserts p. |

| ∴ | p. |

This form is not deductively valid, for the premises could be true and the conclusion false. Reliable authorities do sometimes make errors. It is, however, inductively correct, for it is a special case of the statistical syllogism. It could be rewritten as follows:

| c] | The vast majority of statements made by x concerning subject S are true.

p is a statement made by x concerning subject S. |

| ∴ | p is true. |

There are a number of ways in which the argument from authority can be misused.

1. The authority may be misquoted or misinterpreted. This is not a logical fallacy but a case of an argument with a false premise; in particular, the second premise of b is false.

| d] | The authority of Einstein is sometimes summoned to support the theory that there is no such thing as right or wrong except insofar as it is relative to a particular culture. It is claimed that Einstein proved that everything is relative. As a matter of fact, Einstein expounded an important physical theory of relativity, but his theory says nothing whatever about cultures or moral standards. This use of Einstein as an authority is a clear case of misinterpretation of the statements of an authority. |

When a writer cites an authority, the accepted procedure is to document the source so that the readers can, if they wish, check the accuracy of transmission.

2. The authority may have only glamour, prestige, or popularity; even if he or she has competence in some related field of learning, that does not constitute a requirement that such an "authority" must fulfill.

| e] | Testimonials of movie stars and athletes are used to advertise breakfast cereals. |

The aim of such advertising is to transfer the glamour and prestige of these people to the product being advertised. No appeal to evidence of any kind is involved; this is a straightforward emotional appeal. It is, of course, essential to distinguish emotional appeals from logical arguments. Although some athletes who make claims about the nutritional superiority of breakfast foods may have considerable knowledge of human physiology and nutrition, by no means all of them do. What is more important, whether they have knowledge of this sort or not, such expertise is not a qualification for making these endorsements, and the advertising appeal is to athletic prowess rather than scientific knowledge. Insofar as any argument is involved in such testimonials, it is fallacious, for it has the form a rather than the form b. Likewise, when an appeal to authority is made to support a conclusion (rather than to advertise a product), it may be merely an attempt to transfer prestige from the authority to the conclusion.

| f] | As the president of Consolidated Amalgamated said in a recent speech, the Fifth Amendment to the Constitution, far from being a safeguard of freedom, is a threat to the very legal and political institutions that guarantee us our freedoms. |

An industrial magnate like the president of Consolidated Amalgamated is a person of great prestige but one who can hardly be expected, by virtue of that position, to be an expert in jurisprudence and political theory. Transferring personal prestige to a conclusion is not the same as giving evidence that it is true. This misuse of the argument from authority is clearly an appeal to emotion.

3. Experts may make judgments about something outside their special fields of competence. This misuse is closely akin to the preceding one. The first premise required in b is "x is a reliable authority concerning p." Instead, a different premise is offered, namely, "x is a reliable authority concerning something" (which may have nothing to do with p).

| g] | Einstein is an excellent authority in certain branches of physics, but he is not thereby a good authority in other areas. In the field of social ethics he made many pronouncements, but his authority as a physicist does not carry over. |

Again, a transfer of prestige is involved. Einstein's great prestige as a physicist is attached to his statements on a variety of other subjects.

4. Authorities may express opinions about matters concerning which they could not possibly have any evidence. As we pointed out, one of the conditions of reliable authorities is that their judgments be based upon objective evidence. If p is a statement for which x could not have evidence, then x cannot be a reliable authority concerning p. This point is particularly important in connection with the pronouncements of supposed authorities in religion and morals.

| h] | Moral and religious authorities have often said that certain practices, such as sodomy, are contrary to the will of God. It is reasonable to ask how these persons, or any others, could possibly have evidence about what God wills. It is not sufficient to answer that this pronouncement is based upon some other authority, such as a sacred writing, a church father, or an institutional doctrine. The same question is properly raised about these latter authorities as well. |

In this example, also, there is great danger that the appeal to authority is an emotional appeal rather than an appeal to any kind of evidence. In any case, the point is that appeals to authority cannot be supported indefinitely by further appeals to other authorities. At some stage the appeal to authority must end, and evidence of some other sort must be taken into account.

5. Authorities who are equally competent, as far as we can tell, may disagree. In such cases there is no reason to place greater confidence in one than in the other, and people are apt to choose the authority that gives them the answer they want to hear. Ignoring the judgment of opposed authorities is a case of biasing the evidence. When authorities disagree it is time to reconsider the objective evidence upon which the authorities have supposedly based their judgments.

A special form of the argument from authority, known as the argument from consensus, deserves mention. In arguments of this type a large group of people, instead of a single individual, is taken as an authority. Sometimes it is the whole of humanity, sometimes a more restricted group. In either case, the fact that a large group of people agrees with a certain conclusion is taken as evidence that it is true. The same considerations that apply generally to arguments from authority apply to arguments from consensus.

| i] | There cannot be a perpetual motion machine; competent physicists are in complete agreement on this point. |

This argument may be rendered as follows:

| j] | The community of competent physicists is a reliable authority on the possibility of perpetual motion machines.

The community of competent physicists agrees that a perpetual motion machine is impossible. |

| ∴ | A perpetual motion machine is impossible. |

The argument from consensus is seldom as reasonable as j. More often it is a blatant emotional appeal.

| k] | Every right-thinking American knows that national sovereignty must be protected against the inroads of international organizations like the United Nations. |

The force of the argument, if it merits the name, is that a person who supports the United Nations is not a right-thinking American. There is a strong emotional appeal for many Americans to be members of the group of right-thinking Americans.

The classic example of an argument from consensus is an argument for the existence of God.

| l] | In all times and places, in every culture and civilization, people have believed in the existence of some sort of deity. Therefore, a supernatural being must exist. |

Two considerations must be raised. First, is there any reason for regarding the whole of humanity as a theological authority, even if the alleged agreement exists? Second, on what evidence has the whole of mankind come to the conclusion that God exists? As we have seen, reliable authorities must base their judgment upon objective evidence. In view of this fact, the argument from consensus cannot be the only ground for believing in the existence of God, for if it were it would be logically incorrect.

To sum up, arguments of form b are inductively correct, and those of form a are fallacious. Fallacious appeals to authority are usually appeals to emotion rather than appeals to evidence.

25. ARGUMENT AGAINST THE PERSON

The argument against the person4 is a type of argument that concludes that a statement is false because it was made by a certain person. It is closely related to the argument from authority, but it is negative rather than positive. In the argument from authority, the fact that a certain person asserts p is taken as evidence that p is true. In the argument against the person, the fact that a certain person asserts p is taken as evidence that p is false.

In analyzing the argument from authority, we saw that it could be put into an inductively correct form, a special case of the statistical syllogism. To do so, it was necessary to include a premise of the form "x is a reliable authority concerning p." We discussed the characteristics of reliable authorities. The argument against the person can be handled similarly. To accomplish this end we need an analogous premise involving the concept of a reliable anti-authority. A reliable anti-authority about a given subject is a person who almost always makes false statements about that subject. We have the following inductively correct argument form:

| a] | x is a reliable anti-authority concerning p.

x asserts p. |

| ∴ | Not-p (i.e., p is false). |

Like the argument from authority, this is also a special case of the statistical syllogism. It could be rewritten:

| b] | The vast majority of statements made by x concerning subject S are false.

p is a statement made by x concerning subject S. |

| ∴ | p is false. |

It must be emphasized that a reliable anti-authority is not merely someone who fails to be a reliable authority. A person who is not a reliable authority cannot be counted upon to be right most of the time. This is far different from being consistently wrong. An unreliable authority is a person who cannot be counted upon at all. The fact that such a person makes a statement is evidence for neither its truth nor its falsity.
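The three cases just distinguished can be pictured with a small sketch. It assumes, purely for illustration, that we could put a number on the fraction of x's statements about subject S that are true; the function name and the 0.9 cutoff for "the vast majority" are hypothetical, since the text fixes no such figure.

```python
def assess_assertion(true_fraction, threshold=0.9):
    """Classify what x's asserting p tells us about p.

    true_fraction: hypothetical share of x's statements on subject S that are true.
    threshold: assumed cutoff for "the vast majority"; the text gives no number.
    """
    if not 0.0 <= true_fraction <= 1.0:
        raise ValueError("true_fraction must lie between 0 and 1")
    if true_fraction >= threshold:
        return "reliable authority: evidence that p is true"
    if true_fraction <= 1.0 - threshold:
        return "reliable anti-authority: evidence that p is false"
    return "unreliable authority: evidence for neither"


for fraction in (0.97, 0.50, 0.03):
    print(fraction, "->", assess_assertion(fraction))
```

Only the two extreme cases supply a usable first premise for a statistical syllogism; the middle case supplies none.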

Schema a is, as we have said, inductively correct, but whether it has any utility depends upon whether there are any reliable anti-authorities. It will be useless if we can never satisfy the first premise. Although there are not many cases in which we can say with assurance that a person is a reliable anti-authority, there does seem to be at least one kind of reliable anti-authority, namely, scientific cranks.5 They can be identified by several characteristics.

1. They usually reject, in wholesale fashion, all of established science or some branch of it.

2. They are usually ignorant of the science they reject.

3. The accepted channels of scientific communication are usually closed to them. Their theories are seldom published in scientific journals or presented to scientific societies.

4. They regard opposition of scientists to their views as a result of the prejudice and bigotry of scientific orthodoxy.

5. Their opposition to established science is usually based upon a real or imagined conflict between science and some extrascientific doctrine -- religious, political, or moral.

A "scientific" theory propounded by a person who has the foregoing characteristics is very probably false.

Great scientific innovators propose theories that are highly unorthodox and they meet with strenuous opposition from the majority of scientists at the time. Nevertheless, they are not cranks, according to our criteria. For instance, highly original scientific theorists are, contrary to characteristic 2, thoroughly familiar with the theories they hope to supersede. Furthermore, we must note, deductive validity has not been claimed for schema a. The fact that a statement is made by a reliable anti-authority does not prove conclusively that it is false. We cannot claim with certainty that no scientific crank will ever produce a valuable scientific result.

Although the argument against the person does have the inductively correct form a, it is frequently misused. These misuses are usually substitutions of emotional appeal for logical evidence. Instead of showing that someone who makes a statement is a reliable anti-authority, the misuser vilifies the person by attacking that person's personality, character, or background. The first premise of a is replaced by an attempt to arouse negative feelings. For example,

| c] | In the 1930s the Communist party in Russia rejected the genetic theories of Gregor Mendel, an Austrian monk, as "bourgeois idealism." A party orator who said "The Mendelian theory must be regarded as the product of a monkish bourgeois mind" would be guilty of a fallacious use of the argument against the person. |

Clearly, the national, social, and religious background of the originator of a theory is irrelevant to its truth or falsity. Being an Austrian monk does not make Mendel a reliable anti-authority in genetics. The condemnation of the Mendelian theory on these grounds is an obvious case of arousing negative emotions rather than providing negative evidence. It is also an instance of the genetic fallacy (section 3). A subtler form of the same fallacy may be illustrated as follows:

| d] | Someone might claim that there is strong psychoanalytic evidence in Plato's philosophical writings that he suffered from an unresolved oedipal conflict and that his theories can be explained in terms of this neurotic element in his personality. It is then suggested that Plato's philosophical theories need not be taken seriously because they are thus explained. |

Even if we assume that Plato had an Oedipus complex, the question still remains whether his philosophical doctrines are true. They are not explained away on these psychological grounds. Having an Oedipus complex does not make anyone a reliable anti-authority.

Just as the argument from consensus is a special form of the argument from authority, similarly there is a negative argument from consensus which is a special form of the argument against the person. According to this form of argument, a conclusion is to be rejected if it is accepted by a group that has negative prestige. For example,

| e] | Chinese Communists believe that married women should have the right to use their own family names. |

| ∴ | Married women should be compelled to adopt the family names of their husbands. |

This argument is clearly an attempt to arouse negative attitudes toward some aspects of women's liberation.

There is one fundamental principle that applies both to the argument from authority and to the argument against the person. If there is, objectively, a strong probability relation between the truth or falsity of a statement and the kind of person who made it, then that relation can be used in a correct inductive argument. It becomes the first premise in a statistical syllogism. Any argument from the characteristics of the person who made a statement to the truth or falsity of the statement, in the absence of such a probability relation, is invariably incorrect. These fallacious arguments are often instances of the genetic fallacy (section 3). Example c of section 3, as well as example c of this section, illustrates this point.

4 The argument against the person is closely related to, but not identical with, the traditional argumentum ad hominem. This departure from tradition is motivated by the symmetry between the argument from authority and the argument against the person, and by the fact that the argument against the person is reducible to statistical syllogism.

5 For interesting discussions of many scientific cranks, see Martin Gardner, Fads and Fallacies in the Name of Science (New York: Dover Publications, Inc., 1957).

Analogy, a widely used form of inductive argument, is based upon a comparison between objects of two different types. This is how it works. Objects of one kind are known to be similar in certain respects to objects of another kind. Objects of the first kind are known to have a certain characteristic; it is not known whether objects of the second kind have it or not. By analogy we conclude that, since objects of the two kinds are alike in some respects, they are alike in other respects as well. Therefore, objects of the second kind also have the additional property that those of the first kind are already known to possess. For example,

| a] | Canadian medical researchers conducted experiments upon rats to determine the effects of saccharin upon humans. They found that a significantly greater percentage of rats that had been given large quantities of saccharin developed cancer of the bladder than did those that had not been given any saccharin. By analogy, many experts have concluded that, since rats and humans are physiologically similar in various respects, saccharin poses a threat of bladder cancer to humans who use it as an artificial sweetener. It is for this reason that your favorite diet soft drink carries a warning.6 |

This kind of argument may be schematized as follows:

| b] | Objects of type X have properties G, H, etc.

Objects of type Y have properties G, H, etc.

Objects of type X have property F. |

| ∴ | Objects of type Y have property F. |

In argument a, rats are objects of type X, and humans are objects of type Y. G, H, etc., are the physiological properties rats and humans have in common. F is the property of developing bladder cancer upon consumption of large quantities of saccharin. (The fact that only some, but not all, rats developed bladder cancer does not undermine the analogy; saccharin increases the chances of bladder cancer in rats, and so it is a reasonable inference that it will have the same effect upon humans.)

Like other kinds of inductive arguments, analogies may be strong or weak. The strength of an analogy depends principally upon the similarities between the two types of objects being compared. Any two kinds of objects are alike in many respects and unlike in many others. The crucial question for analogical arguments is this: Are the objects that are being compared similar in ways that are relevant to the argument? To whatever extent there are relevant similarities, the analogy is strengthened. To whatever extent there are relevant dissimilarities, the analogy is weakened. Rats and humans are very dissimilar in many ways, but the question at issue in a is a physiological one, so that the physiological similarities are important and the nonphysiological dissimilarities are unimportant to the particular argument.

It is not easy to state with precision, in a general way, what constitutes relevance or irrelevance of similarities or differences. The question is, what kinds of similarities or differences are likely to make a difference to the phenomenon under consideration? Our common sense tells us that the fact that both rats and humans are mammals is apt to be an important similarity where the physiological effects of saccharin are concerned. We might well wonder whether the fact that rats are four-legged animals whereas humans are two-legged could be relevant. We might be fairly confident that the possession of tails by rats and the lack of them in humans is irrelevant. We can be pretty sure that the fact that male rats do not wear neckties whereas some human males do is totally irrelevant. Of course, the scientists who conduct physiological experiments have a considerable body of background knowledge and experience that enables them to make informed judgments regarding relevance and irrelevance. Experimentation with laboratory animals is an established procedure in the biomedical sciences; its values and limitations are well known.
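The sorting of similarities and differences into relevant and irrelevant can be pictured with a rough sketch. It is only an illustration: the property labels are taken loosely from the rat and human example, the function name is hypothetical, and the set of relevant properties is an assumption supplied by background knowledge, not something the code can work out for itself.

```python
def weigh_analogy(shared, differing, relevant):
    """Pick out the relevant similarities and the relevant dissimilarities.

    shared, differing, relevant: sets of property labels (illustrative only).
    Relevant similarities strengthen the analogy; relevant differences weaken it.
    """
    return shared & relevant, differing & relevant


# Rats (type X) and humans (type Y), as in example a.
shared = {"mammal"}
differing = {"has a tail", "wears neckties"}
# Assumed judgment of relevance for a physiological question.
relevant = {"mammal"}

strengthening, weakening = weigh_analogy(shared, differing, relevant)
print("relevant similarities:", sorted(strengthening))
print("relevant dissimilarities:", sorted(weakening))
```

If we decided that walking on four legs rather than two mattered to the effects of saccharin, we would add that property to both `differing` and `relevant`, and it would then count against the analogy.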

Here are some additional analogies.

| c] | Archaeologists, excavating a site where prehistoric people lived, find a number of stones that are similar in shape, and which could not have acquired that shape unless they had been fashioned by humans. Although these stones do not have attached handles, the archaeologists notice that they have about the same shape as axheads used by primitive people today. It is inferred, by analogy, that these stones served the prehistoric people as axheads. The handles are missing because the wood has rotted away. |

Archaeologists who make analogical inferences of this sort must attempt to evaluate both the similarities between the ancient stones and the modern axheads and also the similarities between the prehistoric culture and the primitive culture in which stone axes are used today.

| d] | Some pacifists have argued, along the following lines, that war can never be a means for bringing about peace, justice, or brotherhood. If you plant wheat, wheat comes up. If you plant corn, you get corn. If you plant thistles, you don't expect to get strawberries. Likewise, if you plant hatred and murder, you can't expect to get peace, justice, and brotherhood. "Fighting for peace is like fornicating for chastity" seems to be a concise formulation of this argument. |

The enormous dissimilarities between the kinds of "planting" in this example make a very weak analogy.

The design argument -- probably the most widely used argument for the existence of God -- is often given explicitly in the form of an analogy.

| e] | "I [Cleanthes] shall briefly explain how I conceive this matter. Look around the world: contemplate the whole and every part of it: you will find it to be nothing but one great machine, subdivided into an infinite number of lesser machines, which again admit of subdivisions, to a degree beyond what human senses and faculties can trace and explain. All these various machines, and even their most minute parts, are adjusted to each other with an accuracy, which ravishes into admiration all men, who have ever contemplated them. The curious adapting of means to ends, throughout all nature, resembles exactly, though it much exceeds, the productions of human contrivance; of human design, thought, wisdom, and intelligence. Since therefore the effects resemble each other we are led to infer, by all the rules of analogy, that the causes also resemble; and that the Author of Nature is somewhat similar to the mind of men; though possessed of much larger faculties, proportioned to the grandeur of the work, which he has executed. By this argument . . . alone, do we prove at once the existence of a Deity, and his similarity to human mind and intelligence."7 |

The evaluation of this analogy is a complex matter which we shall not undertake. Hume's Dialogues provide an extremely illuminating analysis of this argument. In the same place, Hume gives some additional examples.

| f] | "... whenever you depart, in the least, from the similarity of cases, you diminish proportionately the evidence; and may at last bring it to a very weak analogy, which is confessedly liable to error and uncertainty. After having experienced the circulation of the blood in human creatures, we make no doubt that it takes place in Titius and Maevius; but from its circulation in frogs and fishes, it is only a presumption, though a strong one, from analogy, that it takes place in men and other animals. The analogical reasoning is much weaker, when we infer the circulation of the sap in vegetables from our experience, that the blood circulates in animals; and those, who hastily followed that imperfect analogy, are found, by more accurate experiments, to have been mistaken."8 |

Analogical arguments abound in philosophical literature. We shall conclude by mentioning two additional examples of great importance.

| g] | Plato's dialogues contain an abundance of analogical arguments; the analogy is one of Socrates' favorite forms. In The Republic, for example, many of the subsidiary arguments that occur along the way are analogies. In addition, the major argument of the whole work is an analogy. The nature of justice in the individual is the main concern of the book. In order to investigate this problem, justice in the state is examined at length -- for this is justice "writ large." On the basis of an analogy between the state and the individual, conclusions are drawn concerning justice in the individual. |

| h] | Analogy has often been used to deal with the philosophical problem of other minds. The problem is this. One is directly aware of one's own state of consciousness, such as a feeling of pain, but one cannot experience another person's state of mind. If we believe, as we all do, that other people have experiences similar in many ways to our own, it must be on the basis of inference. The argument used to justify our belief in other minds is regarded as an analogy. Other people behave as if they experience thought, doubt, joy, pain and other states of mind. Their behavior is similar to our own manifestations of such mental states. We conclude by analogy that these manifestations are due, in others as in ourselves, to states of consciousness. In this way we seek to establish the existence of other minds besides our own. |

The argument by analogy illustrates once more the important bearing upon inductive arguments of information in addition to that given in the premises. To evaluate the strength of an analogy it is necessary to determine the relevance of the respects in which the objects of different kinds are similar. Relevance cannot be determined by logic alone -- the kind of relevance at issue in analogical arguments involves factual information. Biological knowledge must be brought to bear to determine what similarities and differences are relevant to a biological question such as example a. Anthropological information is required to determine the relevant similarities and differences with respect to an anthropological question such as example c. These arguments, like most inductive arguments, occur in the presence of a large background of general knowledge, and this general knowledge must be brought into consideration to evaluate the strength of analogies. Failure to take it into account is a violation of the requirement of total evidence.

6 For an excellent analysis of this example, see Ronald N. Giere, Understanding Scientific Reasoning (New York: Holt, Rinehart & Winston, 1979), chap. 12.

7 David Hume, Dialogues Concerning Natural Religion, Part II.

8 Ibid.

Our general background of scientific and commonsense knowledge includes information about a great many causal relations. Such knowledge serves as a basis for inferences from what we directly observe to events and objects that are not available for immediate observation, it enters into causal explanations, and it is a necessary adjunct to rational action. For example,

| a] | A body is fished out of the river, and the medical examiner performs an autopsy to determine the cause of death. By examining the contents of the lungs and stomach, analyzing the blood, and inspecting other organs, she finds that death was caused, not by drowning, but by poison. Her conclusion is based on extensive knowledge of the physical effects of various substances, such as arsenic and water. |

This is a case of inferring the causes when the effects have been observed. Conversely, there are cases in which effects are inferred from observed causes.

| b] | A ranger observes lightning striking a dry forest. On the basis of his causal knowledge, he infers that a fire will ensue. |

Causal knowledge also enables us to exercise some degree of control over events that occur in the world. Sometimes, for example, it is within our power to bring about a cause that will, in turn, produce a desired effect.

| c] | The highway department spreads salt on icy roads to cause the ice to melt, thereby reducing the risk of highway accidents. |

In other cases, our aim is to prevent occurrences of an undesirable sort. Causal knowledge makes this possible as well.

| d] | Careful investigations are conducted to ascertain the causes of airline crashes in order to prevent such accidents from happening in the future. In a recent case, for example, an airplane taking off from Washington National Airport failed to gain sufficient altitude to clear a bridge and crashed into the Potomac River. Preliminary investigation suggested that the cause was a buildup of ice on the surfaces of the wings. Since airplanes derive their lift from the passage of air over wings having the shape of airfoils, an accumulation of ice that radically alters the shape of the wing surfaces can deprive the airplane of its lift. It is not, incidentally, the weight of the ice that causes the problem; it is the change in shape of the cross-section of the wing that is at fault. More careful de-icing immediately before take off could prevent accidents of this type in the future. |

In example c, as in b, there is an inference from cause to effect on the basis of a knowledge of causal relations. In example d, as in a, the inference is from effect to cause. Moreover, in each of the foregoing examples we have an explanation of the effect. The victim died because poison was ingested, the fire broke out because lightning struck, the ice on the road melted because salt was spread upon it, and the airplane crashed because ice had collected on its wings.

Regardless of the purposes of such inferences, the reliability of the conclusions depends upon the existence of certain causal relations. If, for purposes of logical analysis, we transform these inferences into arguments, we must include premises stating that the appropriate causal relations hold. Consider the following argument:

| e] | Mrs. Smith was frightened by bats during her pregnancy. |

| ∴ | Mrs. Smith's baby will be "marked." |

As it stands, this argument is neither deductively valid nor inductively correct, for it needs a premise stating that there is a causal relation between being frightened and having a "marked" baby.

| f] | If an expectant mother is frightened, it will cause her baby to be "marked."

Mrs. Smith was frightened by bats during her pregnancy. |

| ∴ | Mrs. Smith's baby will be "marked." |

Now the argument is logically correct; in fact, it is deductively valid. Let us give a similar reconstruction of example b.

| g] | If a bolt of lightning strikes a dry forest, it will ignite the dry wood and cause a fire to start.

A bolt of lightning has just struck a dry forest. |

| ∴ | A fire will start. |

This argument is also deductively valid.
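The difference the added premise makes can be checked mechanically. The sketch below is a simplification: it treats the causal premise as an ordinary material conditional, on the assumption that a causal conditional is at least as strong as a material one, and it tests validity by running through every assignment of truth values. The function names are illustrative.

```python
from itertools import product


def is_valid(premises, conclusion, n_vars):
    """Return True if no assignment makes every premise true and the conclusion false."""
    for values in product([True, False], repeat=n_vars):
        if all(p(*values) for p in premises) and not conclusion(*values):
            return False
    return True


def implies(a, b):
    """Material conditional: false only when a is true and b is false."""
    return (not a) or b


# P: the cause occurs (lightning strikes a dry forest); Q: the effect follows.
with_causal_premise = is_valid(
    premises=[lambda p, q: implies(p, q), lambda p, q: p],
    conclusion=lambda p, q: q,
    n_vars=2,
)
# The form of argument e as it stands: P alone, with no general premise.
without_causal_premise = is_valid(
    premises=[lambda p, q: p],
    conclusion=lambda p, q: q,
    n_vars=2,
)
print(with_causal_premise, without_causal_premise)  # True False
```

The check concerns form only. It says nothing about whether the general premise is true, which is the question that separates example g from example f.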

It will be useful for our discussion of causality to note that arguments f and g have premises of two different kinds. The first is a general causal assertion about what happens whenever a condition of a certain kind occurs.

In example f, the first premise makes a claim (which is, of course, false) about what happens when a pregnant woman is frightened. It is stated in a conditional form, but to emphasize the fact that it is not just an ordinary material conditional, the causal relation is explicitly mentioned. The second premise is a particular statement about something that happened to a particular person. Example g has premises of the same two types, but in this latter example, the general premise is at least plausible.

In all of the examples that have been considered so far, it has been supposed that the general causal relations were given, and attention was focused upon the particular cause. In example a, there are many possible causes of death; the medical examiner wants to know which one was present in this particular case. In example d, again, there are many possible causes of an airplane crash, but the investigators want to know which one was responsible for this particular crash.

One striking difference between examples f and g is the fact that the former has an obviously false general premise, whereas the general premise in the latter might well be true. We have reiterated many times that logic is concerned, not with the truth or falsity of the premises, but with the logical structure of the arguments. Nevertheless, it is clear that the reliability of such causal arguments depends upon the existence of causal relations of the sort described by the general causal premise. Given the enormous practical and theoretical importance of knowledge of such causal relations, we must consider the logical character of the kinds of arguments that can be used to establish them. The main purpose of many scientific investigations is to establish just such general causal relations. An interesting historical case illustrates the point.

| h] | During the years 1844-1848, Ignaz Semmelweis was a member of the medical staff of the First Maternity Division of the Vienna General Hospital. He was alarmed to discover that a high percentage of women who had their babies in that division became ill with a serious disease, known as "childbed fever," and many of them died. In 1844, 8.2 percent of the new mothers -- that is, 260 women -- died; in 1845, the death rate was 6.8 percent; and in 1846, 11.4 percent succumbed to the disease. In the Second Maternity Division, located next to the First, the death rate was surprisingly low by comparison -- 2.3 percent, 2.0 percent, and 2.7 percent for the corresponding years. After careful investigation, Semmelweis ascertained that the problem was connected with the fact that the women in the First Division -- unlike those in the Second -- were examined by medical students and physicians who came there directly from the autopsy room, where they had been performing dissections of cadavers. Although the germ theory of disease had not been established at that time, Semmelweis concluded that the disease was caused by the transfer of "putrid matter" on the hands of the examiners from the corpses to the mothers. He believed that the foul odor of this material was a sign of some harmful component, and he knew that chlorinated lime was commonly used to eliminate the odor of decaying matter. Thus he recommended a program of careful hand-washing in chlorinated lime (which, we know today, is a powerful disinfectant) for the examiners. When this program was instituted, the death rate declined to that of the Second Division. This is another case in which knowledge of a causal relation helped to prevent an undesirable effect.9 |

This example illustrates the fundamental distinction between the problem of ascertaining which particular cause was operative and the problem of establishing a general causal relation. In the case of the airplane crash, the investigators are aware of the fundamental physical relationship between the shape of the wing and the lift produced when it moves through air, as well as many other general causal relations that might have been involved. We may say that the general causal laws are known. In this case the problem is to find out which particular cause happened to be operative. In the case of childbed fever, in contrast, Semmelweis did not know any general causal relationship that would connect a particular fact with the effect that concerned him. In this situation it was the ascertainment of the general causal law that was needed.
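What pointed Semmelweis toward a general causal relation was the gap between the death rates of the two divisions. A minimal sketch, using only the figures reported in example h, makes the size of that gap explicit; the variable names are illustrative.

```python
# Death rates (percent) reported in example h, by year.
first_division = {1844: 8.2, 1845: 6.8, 1846: 11.4}
second_division = {1844: 2.3, 1845: 2.0, 1846: 2.7}

for year in sorted(first_division):
    ratio = first_division[year] / second_division[year]
    print(f"{year}: First Division rate is {ratio:.1f} times the Second's")

# The example gives 260 deaths as 8.2 percent for 1844, which implies
# roughly this many new mothers in the First Division that year.
implied_mothers_1844 = round(260 / 0.082)
print("implied number of new mothers in 1844:", implied_mothers_1844)
```

In each of the three years the First Division's rate is more than three times that of the Second, a difference large enough to call for a causal explanation.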

It would be nice if, at this point, we could produce some rules for the discovery of general causal relations, but as we recall from section 3, Discovery and Justification, logic simply cannot provide any such thing. The best we can hope to do is to furnish methods for the evaluation of arguments designed to justify the claim that a general causal relation exists. A set of methods that serve this purpose is available.

9 A fascinating discussion of this example can be found in Carl G. Hempel, Philosophy of Natural Science (Englewood Cliffs, N.J.: Prentice-Hall, Inc. [Foundations of Philosophy Series], 1966), chap. 2.

The basic methods for establishing general causal relations that we shall study were propounded in the middle of the nineteenth century by the English philosopher John Stuart Mill. He offered five methods; we shall discuss four of them. They constitute an excellent point of departure for the topic with which we are concerned. After presenting them in their traditional forms, we shall suggest important modifications to bring them up to date.

The first is known as the method of agreement. Consider the following example:

| a] | Suppose you operate a fruit store, and you find that some oranges that look fine on the outside are pulpy and juiceless. You try to learn what causes this condition. You find that it sometimes happens to navel, Valencia, and other varieties of oranges, and sometimes not. You find that it sometimes happens to oranges that have been hauled by truck and sometimes to those that have been transported by train. It happens sometimes to those grown in Florida and sometimes to those from California. You have several different wholesale suppliers, and it sometimes happens to oranges that come from each of them. The one thing they always have in common is that they were subjected to freezing temperatures. You conclude that being frozen is the cause of the defect. |

The general idea behind Mill's method of agreement is this. There is a certain effect whose cause you wish to ascertain. You look for instances of this effect arising in as wide a variety of circumstances as you can find. In this case the effect is the dryness in the oranges. The variety of circumstances refers to the different varieties of oranges, the different modes of transport, the different suppliers, and the different states in which they were grown. The one thing these various cases have in common is the fact that they were subjected to freezing temperatures. We might schematize the method of agreement as follows:

| b] | ABCD → X
ABCE → X
ABDF → X
ACDG → X

where X is the effect whose cause is sought, and A, B, C, D, E, F, G are the various circumstances. Since A is the only circumstance common to all of the different instances, it is concluded that A is the cause of X. |
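As an informal illustration, the method of agreement can be read as a set intersection: collect the circumstances present in each instance of the effect and keep only those present in every instance. The sketch below assumes each instance is written as a string of single-letter circumstances, as in the schema; the function name `common_circumstances` is hypothetical and is not part of Mill's presentation.

```python
def common_circumstances(instances):
    """Return the circumstances present in every instance in which the effect occurred."""
    if not instances:
        return set()
    common = set(instances[0])
    for circumstances in instances[1:]:
        # Keep only circumstances that also appear in this instance.
        common &= set(circumstances)
    return common


# The four instances of the schema; in each of them the effect X occurred.
schema_instances = ["ABCD", "ABCE", "ABDF", "ACDG"]
print(common_circumstances(schema_instances))  # {'A'}
```

The intersection only narrows a list of candidates that the investigator has already supplied; it says nothing about circumstances that were never listed.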

The method of agreement must be used with considerable caution. There is an old joke that illustrates the danger:

| c] | A man wants to find out what causes him to get drunk. One night he drinks scotch and soda, another night he drinks bourbon and soda, another night he drinks rye and soda, etc. He concludes that the soda is causing his intoxication. |

What is the problem? The use of Mill's methods (not just the method of agreement) requires us to make certain assumptions. In the first place, we presume that there is some cause of the phenomenon we are investigating, and that we have identified it as one of the items in our schematization. In example a, it was crucial that being subjected to freezing temperatures was among the factors considered. If it had not been included in the list, Mill's method of agreement could never have revealed it as the cause. This shows, incidentally, why Mill's method of agreement is not a method for discovering causes. We have to think of the various conditions that might be causally relevant. If we have included the item in the list, Mill's method of agreement can pick it out of the list of possible candidates. Moreover, as example c shows, causal factors do not come labeled in nature. If we do not realize that alcohol is a possible cause of intoxication and is contained in all the different varieties of whiskey, the method of agreement cannot possibly locate the real cause.
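The point about example c can be made concrete with a small sketch. It assumes each night is described as a set of listed factors; the helper `common_factors` and the factor labels are hypothetical. When alcohol is not listed, soda is the only common factor; when alcohol is listed, it at least appears among the candidates, although the method of agreement by itself still does not separate it from the soda.

```python
def common_factors(instances):
    """Return the factors listed in every instance in which the effect occurred."""
    if not instances:
        return set()
    common = set(instances[0])
    for factors in instances[1:]:
        common &= set(factors)
    return common


# The nights as the man in the joke describes them.
nights_as_described = [
    {"scotch", "soda"},
    {"bourbon", "soda"},
    {"rye", "soda"},
]

# The same nights once alcohol is recognized as a factor in each whiskey.
nights_with_alcohol_listed = [night | {"alcohol"} for night in nights_as_described]

print(common_factors(nights_as_described))         # {'soda'}
print(common_factors(nights_with_alcohol_listed))  # contains both 'soda' and 'alcohol'
```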

Mill's second method is known as the method of difference. Consider the following example:

| d] | You wonder whether drinking coffee in the evening keeps you awake at night. In order to answer this question you perform a small experiment. On two successive Wednesdays you try to do everything the same except for one thing. You attend the same classes during the day, you have exactly the same meals, you do the same amount of studying in the evening, and you watch the same television shows. On one of these two days you have several cups of coffee after dinner, but on the other you cut out the evening coffee. On the night following the evening coffee drinking you have a great deal of trouble getting to sleep, but on the night after omitting the evening coffee you fall asleep immediately after going to bed. You conclude that drinking coffee in the evening does interfere with your sleep. |

The basic idea behind the method of difference is this: We seek the cause of a particular phenomenon -- in this case sleeplessness. We try to find, or we deliberately set up, two similar situations -- one in which the effect is present, the other in which the effect is absent. Suppose it turns out that all factors, except one that might be causally relevant to the effect in question, are the same in both cases, but that one factor is present in the case in which the effect occurs and absent in the case in which the effect does not occur. Under these circumstances, we conclude that the factor that is present in the one case and absent in the other is a cause of the phenomenon under investigation. The method of difference is so called because the factor present in the one case and absent in the other makes the difference between the occurrence and nonoccurrence of the effect.

The method of difference can be represented by the following schema:

| e] | ABCD → X

(not-A) BCD → not-X |

If this schema is applied to example d, "X" stands for sleeplessness, "A" represents the consumption of coffee in the evening, and "B," "C," and "D" stand for such factors as attending the same classes during the day, having the same food to eat during the day, and spending the evening engaged in the same activities. The conclusion is that drinking coffee in the evening does cause a problem in going to sleep at night.
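Read informally, the method of difference is a set difference between two cases: the factors present when the effect occurred, minus the factors present when it did not. The following sketch assumes each case is given as a set of factor labels; the function name and the labels are hypothetical stand-ins for the factors in example d.

```python
def differing_factors(case_with_effect, case_without_effect):
    """Return factors present when the effect occurred and absent when it did not."""
    return set(case_with_effect) - set(case_without_effect)


# Example d: two Wednesdays that are alike except for the evening coffee.
wednesday_sleepless = {"coffee", "same classes", "same meals", "same evening activities"}
wednesday_slept_well = {"same classes", "same meals", "same evening activities"}

print(differing_factors(wednesday_sleepless, wednesday_slept_well))  # {'coffee'}
```

If more than one factor survives the subtraction, the two cases were not similar enough for the method to single out one of them.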

Before going on to consider the next of Mill's methods, it will be worthwhile to pause for a brief comparison of the methods of agreement and difference. The method of agreement should seem rather familiar, for it is very much like induction by enumeration (section 20). We notice that in many cases A is followed by X, and we conclude inductively that in all cases, A will be followed by X. The fact that we insist that other factors such as B, C, D, . . . must be varied from one case to another is designed to avoid committing the fallacy of biased statistics (section 22). We say, roughly, that in a large number of instances found in a wide variety of different circumstances A has, without exception, been followed by X; therefore, in all likelihood, future instances of A will be followed by instances of X.

The method of difference does not seem to resemble the method of agreement at all closely. When we apply the method of difference, we show that B, C, D, . . . cannot be causes of X, for those factors can be present even when X is absent. Therefore, we reason, neither B nor C nor D can produce X. This method of approaching causal relations is often called induction by elimination. Neither the evening's study and television, for example, nor the food you had for dinner are the causes of difficulty in going to sleep, for that problem was absent on one of the nights when those factors were present. The elimination of B, C, D, . . . can be regarded as deductive, for we have counterexamples to the statements that B is always followed by X, C is always followed by X, etc. It does not follow deductively that A is always followed by X. The use of the method of difference, like the use of the method of agreement, requires us to suppose that the phenomenon we are investigating has a cause, and that the cause has been included as one of the items represented in the schema. At best, then, A is only established inductively as the causal factor we were seeking.
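The deductive half of this reasoning, the elimination of B, C, and D, can be sketched as a search for counterexamples. The sketch assumes observations are given as pairs of a factor string and a flag saying whether X occurred; the function name is hypothetical. Any factor present in a case where X is absent refutes the claim that the factor is always followed by X. The factor that survives is not thereby proved to be the cause; it is only the remaining candidate.

```python
def refuted_candidates(observations):
    """Return factors F for which 'F is always followed by X' has a counterexample."""
    refuted = set()
    for factors, effect_present in observations:
        if not effect_present:
            # Every factor present here occurred without the effect.
            refuted |= set(factors)
    return refuted


# Schema e: ABCD is followed by X; (not-A) BCD is followed by not-X.
observations = [("ABCD", True), ("BCD", False)]

refuted = refuted_candidates(observations)
all_factors = set().union(*(set(factors) for factors, _ in observations))
surviving = all_factors - refuted

print(sorted(refuted))    # ['B', 'C', 'D']
print(sorted(surviving))  # ['A']
```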

Toward the end of section 11, we defined the concepts of sufficient condition and necessary condition. We can introduce an analogous (but different -- they must not be confused) distinction between sufficient cause and necessary cause. If A always produces X, we say that A is a sufficient cause of X. If exposure to freezing temperatures always results in pulpy juiceless oranges, then exposure to freezing temperatures is a sufficient cause of pulpy juicelessness. We say that A is a necessary cause of X if X cannot occur in the absence of A. Having an adequate supply of water is a necessary cause for the growth of high-quality oranges; if there is not enough water, the oranges will not be good. Indeed, as we can see from this latter consideration, exposure to freezing temperatures is not a necessary cause of pulpy juicelessness, for that same effect can be a result of an inadequate amount of water.
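These two definitions suggest two different tests against a record of cases. The sketch below uses three made-up orange shipments (hypothetical data, not drawn from the text) and hypothetical function names. A finite record can only fail to refute a claim of sufficiency or necessity; it cannot establish that A always produces X or that X cannot occur without A.

```python
def no_counterexample_to_sufficiency(cases, factor, effect):
    """True if no recorded case has the factor without the effect."""
    return all(case[effect] for case in cases if case[factor])


def no_counterexample_to_necessity(cases, factor, effect):
    """True if no recorded case has the effect without the factor."""
    return all(case[factor] for case in cases if case[effect])


# Hypothetical shipments, invented for illustration only.
shipments = [
    {"frozen": True, "enough_water": True, "pulpy": True},
    {"frozen": False, "enough_water": True, "pulpy": False},
    {"frozen": False, "enough_water": False, "pulpy": True},
]

# Freezing was always followed by pulpiness in these records ...
print(no_counterexample_to_sufficiency(shipments, "frozen", "pulpy"))  # True
# ... but pulpiness also occurred without freezing, so freezing is not necessary.
print(no_counterexample_to_necessity(shipments, "frozen", "pulpy"))    # False
```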

In many everyday situations, what we would normally regard as a cause is a necessary cause. Turning the switch is necessary to turn on your desk lamp, for example, but it certainly is not sufficient. Turning the switch will not make the light go on if the bulb has burnt out or if the lamp is not plugged in.

It is very easy to see that not all sufficient conditions are sufficient causes, and not all necessary conditions are necessary causes. When we defined sufficient conditions, it will be recalled, we simply used the material conditional p ⊃ q (p. 47), which is true in all cases except that in which p is true and q is false. The material conditional "If Mars is a planet, then grass is green" is true -- Mars being a planet is a sufficient condition of grass being green -- but by no stretch of the imagination could it be considered a sufficient cause. In a parallel fashion, we defined necessary conditions in terms of the material conditional ~p ⊃ ~q. The material conditional "If coal is not black, then Alaska is not a state" is true -- coal being black is a necessary condition of Alaska being a state -- but it obviously is not a necessary cause. There is no causal connection whatever between the motion of Mars and the color of grass, and none between the color of coal and the statehood of Alaska. No one would be likely to become confused in such cases.
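Because the material conditional depends only on the truth values of its parts, the two examples can be checked mechanically. The sketch below assumes the usual truth-functional reading, on which p ⊃ q is false only when p is true and q is false; the function and variable names are illustrative. Nothing in the computation refers to any causal connection, which is exactly the point of the examples.

```python
def material_conditional(p, q):
    """p ⊃ q: false only when p is true and q is false."""
    return (not p) or q


mars_is_a_planet = True
grass_is_green = True
# Sufficient condition: "If Mars is a planet, then grass is green."
print(material_conditional(mars_is_a_planet, grass_is_green))  # True

coal_is_black = True
alaska_is_a_state = True
# Necessary condition: "If coal is not black, then Alaska is not a state."
print(material_conditional(not coal_is_black, not alaska_is_a_state))  # True
```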

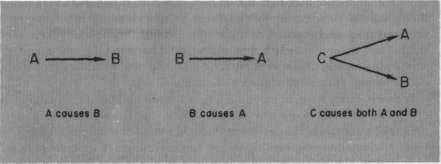

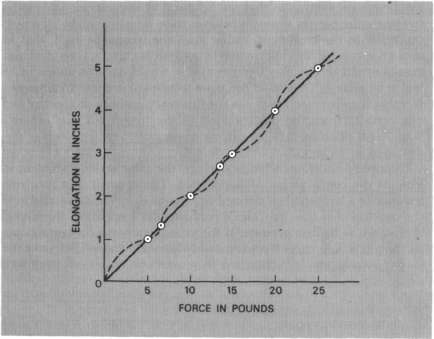

If we are talking about cause-effect relations, it is particularly important to be clear about the distinction between necessary and sufficient conditions on the one hand and necessary and sufficient causes on the other. If, for example, lighting a fuse is a sufficient cause of the explosion of a bomb, then it is also a sufficient condition for that explosion. Because of the relation of contraposition (p. 24), if p is a sufficient condition of q, then q is a necessary condition of p. You should recall the definition of contraposition and make sure you understand why this relationship between sufficient and necessary conditions holds. By virtue of this fact, then, the explosion of the bomb is a necessary condition of the lighting of the fuse. But the explosion certainly cannot be considered any kind of cause of the lighting of the fuse; it is, instead, an effect. Similarly, if planting seeds in the spring is a necessary cause of harvesting corn in July, it is also a necessary condition. But the corn harvested in July is surely not a sufficient cause of the seed being sown in April.
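The relationship between sufficient and necessary conditions can be verified by running through all four combinations of truth values. The sketch assumes the definitions given earlier: p is a sufficient condition of q when p ⊃ q, and p is a necessary condition of q when ~p ⊃ ~q. The function names are illustrative. The check confirms only the logical equivalence; it is silent about which event is the cause and which is the effect.

```python
from itertools import product


def implies(p, q):
    """Material conditional p ⊃ q."""
    return (not p) or q


def is_sufficient_condition(p, q):
    """p is a sufficient condition of q: p ⊃ q."""
    return implies(p, q)


def is_necessary_condition(p, q):
    """p is a necessary condition of q: ~p ⊃ ~q."""
    return implies(not p, not q)


# "p is sufficient for q" and "q is necessary for p" agree in every row.
rows_agree = all(
    is_sufficient_condition(p, q) == is_necessary_condition(q, p)
    for p, q in product([False, True], repeat=2)
)
print(rows_agree)  # True
```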